Semantic Kernel for .NET: a quick look

With Semantic Kernel, we can orchestrate a single object (the kernel) that handles calls to popular API services for interacting with Large Language Models and Small Language Models from OpenAI, Microsoft, Mistral* and Google* (*marked for future releases, according to Microsoft at Build 2024).

Semantic Kernel with GPT4o and .NET

In the previous blog post, I wrote a small page that fetches and renders images, but the images had no captions.

Let's take a look at how we can write descriptive captions with the help of GPT4o's vision and text generation capabilities, within the context of Semantic Kernel. Semantic Kernel provides the scaffolding for adding user messages through helper methods such as `AddUserMessage`. The system message and the chat history are also readily available, which makes it simple to append user and assistant messages to the history and maintain context when we need to chat continuously.
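As a minimal sketch of that chat-history scaffolding (it requires the `Microsoft.SemanticKernel` NuGet package; the `ChatHistoryDemo` class, the `BuildHistory` helper and the system prompt wording are illustrative assumptions, not part of the original project), a history can be seeded with a system message and then grow turn by turn:

```csharp
using Microsoft.SemanticKernel.ChatCompletion;

public static class ChatHistoryDemo
{
    // Hypothetical helper: builds a history that starts with a system message
    // and then records one user turn and one assistant turn.
    public static ChatHistory BuildHistory(string previousReply)
    {
        // The string constructor seeds the history with a system message
        var history = new ChatHistory("You are a wise and creative mystic who describes images in 20 words or less.");

        // User turn (text only here; the vision example also adds an ImageContent item)
        history.AddUserMessage("Please describe a red forest at dusk.");

        // Assistant turn: feeding the model's earlier reply back in keeps the context
        history.AddAssistantMessage(previousReply);

        return history;
    }
}
```

Passing a history like this to `GetChatMessageContentAsync` sends all of its messages on each request, which is how the model keeps context between turns.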

Add Semantic Kernel to your project via NuGet:

```shell
dotnet add package Microsoft.SemanticKernel --version 1.15.0
```

Then scaffold your kernel as follows. In this instance, the kernel has an OpenAI-specific chat completion service attached to it for general chat; there are Azure-specific ones too:

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

public static Kernel GetSemanticKernel()
{
    // Attach an OpenAI chat completion service (model ID + API key) to the kernel
    var builder = Kernel.CreateBuilder()
        .AddOpenAIChatCompletion("[YOUR_MODEL]", "[YOUR_PROVIDER_DEV_KEY]");

    Kernel kernel = builder.Build();
    return kernel;
}
```

For illustrative purposes, you pass in your model ID/name and your API key here.
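Hard-coding the key is fine for a quick demo, but a common alternative is to read it from the environment so it never lands in source control. A minimal sketch, assuming a hypothetical `ProviderSettings.Require` helper and hypothetical variable names such as `OPENAI_API_KEY` (neither is part of the original project):

```csharp
using System;

public static class ProviderSettings
{
    // Reads a required setting from an environment variable and fails fast
    // with a clear message if it is missing or blank.
    public static string Require(string variableName)
    {
        string? value = Environment.GetEnvironmentVariable(variableName);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException($"Environment variable '{variableName}' is not set.");
        }
        return value;
    }
}
```

The builder call would then become something like `.AddOpenAIChatCompletion(ProviderSettings.Require("OPENAI_MODEL_ID"), ProviderSettings.Require("OPENAI_API_KEY"))`.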

GPT4o Vision abilities

We can then arrange our data as follows to use the model's vision and text generation capabilities, sending our prompt and image URL on each request via Semantic Kernel to get a text response:

public async static Task Main(string[] args)

{

List<string> list = new List<string>()

{

//these are mostly art images of red coloured forests "https://i.pinimg.com/originals/5e/86/0e/5e860e89c4460f0be1a572fc7461fbd6.jpg",

"https://img.freepik.com/premium-photo/red-forest-wallpapers-android-iphone-red-forest-wallpaper_759095-18370.jpg",

"https://img.freepik.com/premium-photo/red-forest-with-river-trees-background_915071-1886.jpg",

"https://images.fineartamerica.com/images-medium-large-5/red-forest-tree-landscape-autumn-ben-robson-hull-photography.jpg"

};

var kernel = GetSemanticKernel();

var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();

var history = new ChatHistory();

foreach (string fileName in list)

{

var imagePrompt = new ChatMessageContentItemCollection() {

new TextContent("please describe in 20 words or less what the image you see is, as if you were a wise and creative mystic"),

new ImageContent( new Uri(fileName ) )

};

history.AddUserMessage(imagePrompt);

var gptDescription =

await chatCompletionService.GetChatMessageContentAsync(history, null, kernel);

Console.WriteLine(gptDescription);

history.AddAssistantMessage(gptDescription.ToString());

}

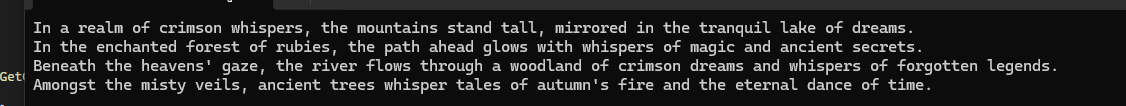

}And you can some nice results coming up:
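Since the goal was captions for the images on the web page from the previous post, each generated description can be paired with its image URL. A minimal sketch, where `CaptionHtml.ToFigure` is a hypothetical helper (not part of the original project) that emits a `<figure>` with a `<figcaption>`; both values are HTML-encoded because model output should be treated as untrusted text:

```csharp
using System.Net;

public static class CaptionHtml
{
    // Hypothetical helper: wraps an image URL and its generated description
    // in <figure>/<figcaption>, reusing the description as alt text.
    public static string ToFigure(string imageUrl, string caption)
    {
        string src = WebUtility.HtmlEncode(imageUrl);
        string text = WebUtility.HtmlEncode(caption.Trim());
        return $"<figure><img src=\"{src}\" alt=\"{text}\"><figcaption>{text}</figcaption></figure>";
    }
}
```

Inside the loop, calling `CaptionHtml.ToFigure` with the loop's image URL and `gptDescription.ToString()` would produce one captioned figure per image.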

Further model capabilities can be added to the kernel through the kernel builder, for example:

```csharp
public static Kernel GetSemanticKernel()
{
    // For OpenAI Text to Speech use the tts-1 or tts-1-hd models, NOT gpt-4o
#pragma warning disable SKEXP0010 // AddOpenAITextToAudio is marked experimental in Semantic Kernel 1.15.0
    var builder = Kernel.CreateBuilder()
        .AddOpenAIChatCompletion("[YOUR_MODEL]", "[YOUR_PROVIDER_DEV_KEY]")
        .AddOpenAITextToAudio("[YOUR_TTS_MODEL]", "[YOUR_PROVIDER_DEV_KEY]");
#pragma warning restore SKEXP0010

    Kernel kernel = builder.Build();
    return kernel;
}
```

Adding the TTS-1 model. While GPT4o is 'multi-modal', this multi-modality is currently limited in the sense that the GPT4o model "accepts text or image inputs and outputs text" (from OpenAI). Use tts-1 or tts-1-hd for text to audio.

Now, extending the previous code with text-to-speech, the descriptions can be played back through the OpenAI Text to Audio service: the GPT4o-generated text is spoken by the TTS model. The updated code looks like this:

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.TextToAudio; // ITextToAudioService

#pragma warning disable SKEXP0001 // the text-to-audio abstractions are marked experimental in Semantic Kernel 1.15.0

public static async Task Main(string[] args)
{
    List<string> list = new List<string>()
    {
        "https://i.pinimg.com/originals/5e/86/0e/5e860e89c4460f0be1a572fc7461fbd6.jpg",
        "https://img.freepik.com/premium-photo/red-forest-wallpapers-android-iphone-red-forest-wallpaper_759095-18370.jpg",
        "https://img.freepik.com/premium-photo/red-forest-with-river-trees-background_915071-1886.jpg",
        "https://images.fineartamerica.com/images-medium-large-5/red-forest-tree-landscape-autumn-ben-robson-hull-photography.jpg"
    };

    var kernel = GetSemanticKernel();
    var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();
    var ttsService = kernel.GetRequiredService<ITextToAudioService>();
    var history = new ChatHistory();

    foreach (string imageUrl in list)
    {
        var imagePrompt = new ChatMessageContentItemCollection()
        {
            new TextContent("please describe in 20 words or less what the image you see is, as if you were a wise and creative mystic"),
            new ImageContent(new Uri(imageUrl))
        };

        history.AddUserMessage(imagePrompt);

        // 1. GPT4o describes the image
        var gptDescription = await chatCompletionService.GetChatMessageContentAsync(history, null, kernel);
        history.AddAssistantMessage(gptDescription.ToString());
        Console.WriteLine(gptDescription);

        // 2. The TTS model speaks the description (MP3 bytes by default)
        var spokenAudio = await ttsService.GetAudioContentsAsync(gptDescription.ToString(), null, kernel);
        ReadOnlyMemory<byte>? byteData = spokenAudio.FirstOrDefault()?.Data;

        PlayAudio(byteData);
    }
}
```

Adding extra code to perform text to speech, then play back the audio.

```csharp
using NAudio.Wave; // add the NAudio NuGet package for the audio playback classes (goes at the top of the file)

private static void PlayAudio(ReadOnlyMemory<byte>? audioData)
{
    // Nothing to play if the TTS call returned no audio
    if (audioData is null || audioData.Value.IsEmpty)
    {
        return;
    }

    // The default file format of the audio result from OpenAI is MP3, not WAV!
    using var memoryStream = new MemoryStream(audioData.Value.ToArray());
    using var audioFileReader = new Mp3FileReader(memoryStream);
    using var outputDevice = new WaveOutEvent();

    outputDevice.Init(audioFileReader);
    outputDevice.Play();

    // Block until playback finishes so the next description is not spoken over this one
    while (outputDevice.PlaybackState == PlaybackState.Playing)
    {
        System.Threading.Thread.Sleep(100);
    }
}
```

Playing back audio from the OpenAI TTS service (playback classes via the NAudio NuGet package).
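Because `Mp3FileReader` only understands MP3, it can help to confirm the format of the returned bytes before choosing a reader, for example if a different response format is ever requested from the TTS service. A minimal heuristic sketch based on well-known file signatures; `AudioSniffer` and `AudioFormat` are hypothetical names, not part of NAudio or Semantic Kernel:

```csharp
using System;

public enum AudioFormat { Unknown, Mp3, Wav }

public static class AudioSniffer
{
    private static readonly byte[] Riff = { (byte)'R', (byte)'I', (byte)'F', (byte)'F' };
    private static readonly byte[] Wave = { (byte)'W', (byte)'A', (byte)'V', (byte)'E' };
    private static readonly byte[] Id3 = { (byte)'I', (byte)'D', (byte)'3' };

    // Heuristic check of the first bytes of an audio payload.
    public static AudioFormat Detect(ReadOnlySpan<byte> data)
    {
        // WAV: "RIFF" <4-byte size> "WAVE"
        if (data.Length >= 12 && data.StartsWith(Riff) && data.Slice(8, 4).SequenceEqual(Wave))
            return AudioFormat.Wav;

        // MP3 with a leading ID3 metadata tag
        if (data.StartsWith(Id3))
            return AudioFormat.Mp3;

        // Bare MPEG frame: 11-bit sync (0xFFE) and layer bits == 01 (Layer III).
        // The layer check excludes AAC ADTS streams, which also start with 0xFFF.
        if (data.Length >= 2 && data[0] == 0xFF && (data[1] & 0xE0) == 0xE0 && (data[1] & 0x06) == 0x02)
            return AudioFormat.Mp3;

        return AudioFormat.Unknown;
    }
}
```

In `PlayAudio`, a `Wav` result could be routed to NAudio's `WaveFileReader` instead of `Mp3FileReader`, and an `Unknown` result skipped.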

When it runs, the GPT4o model generates descriptive text for each image it sees, and the TTS model speaks it:

GPT4o and TTS invocation via Semantic Kernel in Action!!