Pushing capability: Azure Blob Storage tested

Back when I was undertaking my Condenser project, I relied heavily on writing to and reading from Azure Blob Storage: uploading videos as blobs, triggering cloud encode processes that sent their outputs to an output container, then using a Windows application to download them, with all resources running in the same region.

Out of curiosity (and for fun), I wondered how the Windows application would perform if it ran on a machine physically far away from where the blobs lived (UK West). After all, the storage account I was using was configured with Locally Redundant Storage (LRS), meaning that all data written to it (including blobs) is replicated three times within a single data center, and crucially is not replicated anywhere else in the world. In theory, then, a client app trying to access this data from a faraway location should struggle.

This short post briefly explores my findings from having an application request data (blobs) from an Azure Storage account that is physically far away, where replication plays no part in improving availability for the requesting client.

Reminder: building a production-level application configured this way is NOT recommended. You should always make sure your data is located close to where your clients/users will be for optimum performance; this is for exploratory purposes only. If you are constrained by data sovereignty rules (for example, storage account data that must remain in only one geographic area), Azure Files with Azure File Sync might be a pathway to cache some of your data files for quicker access.

The Finding

I decided to spin up a virtual machine in Azure located very far away from the storage account, in Australia Central!! 🦘 The VM was configured as follows:

- Size: Standard B2ms (2 vCPUs)

- 8GB RAM

- Windows 10

- Standard SSD for storage (SATA SSD)

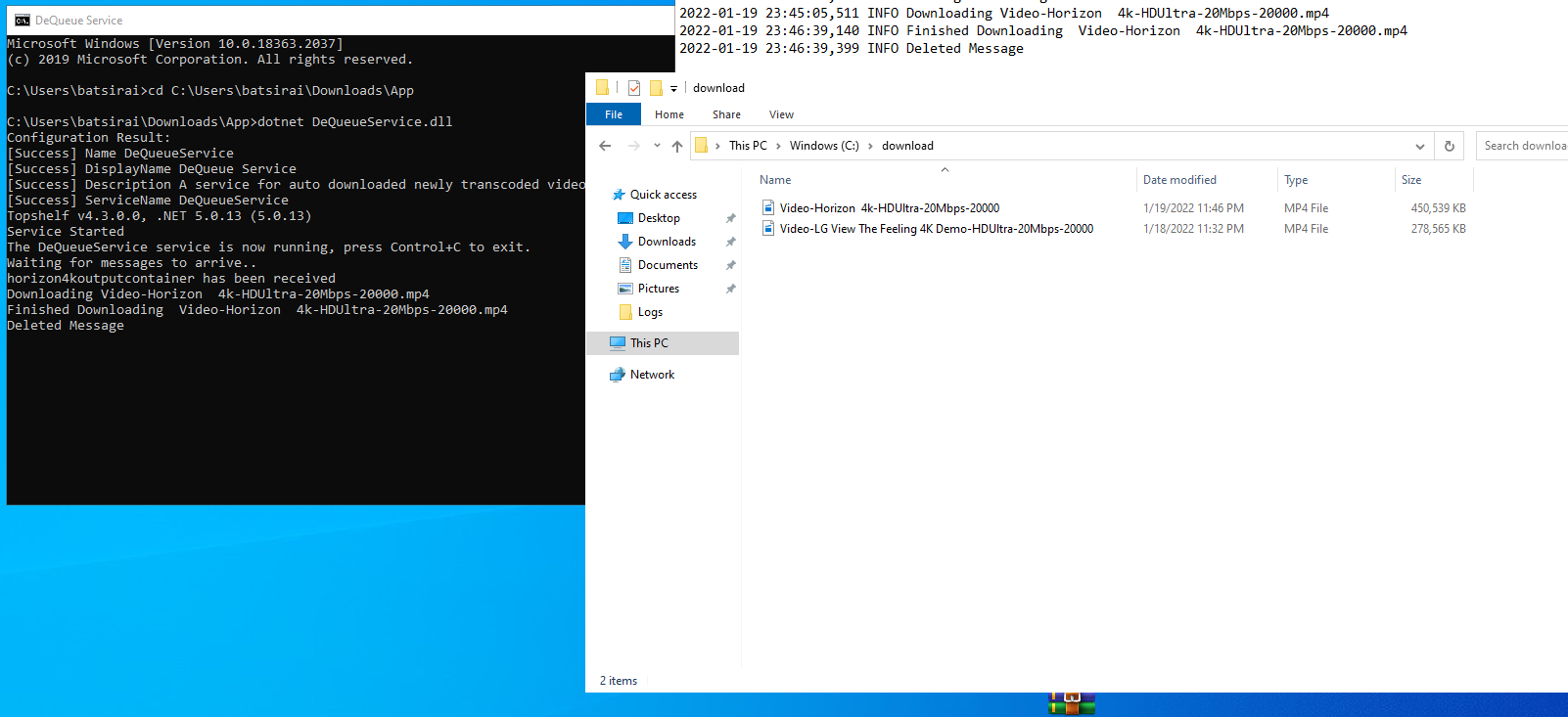

I installed the .NET Runtime on the VM so it could run the Windows application, copied the existing app across and ran it. As in the Condenser project, the app waited for messages to arrive on the notification message queue directing it to download a specific blob:

2022-01-18 23:30:49,458 INFO Service Started

2022-01-18 23:30:52,664 INFO Waiting for messages to arrive..

2022-01-18 23:30:55,982 INFO Downloading Video-LG View The Feeling 4K Demo-HDUltra-20Mbps-20000.mp4

2022-01-18 23:32:12,891 INFO Finished Downloading Video-LG View The Feeling 4K Demo-HDUltra-20Mbps-20000.mp4

2022-01-18 23:32:13,151 INFO Deleted Message

To trigger this, I uploaded an initial blob in the UK to be encoded; the output video was then written to a container (still in the UK) watched by the Windows application running in Australia. In the test above, downloading a 274MB video that lived in the UK took approx. 1 min 17s to arrive in Australia. Not bad at all, and quite remarkable considering there was no replication to any other region.
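The receive, download, delete cycle recorded in the log lines above can be sketched in Python as follows. This is not the author's .NET application: `NotificationQueue`, `BlobSource`, `process_pending`, `InMemoryQueue` and `InMemoryBlobs` are hypothetical names, and the in-memory fakes stand in for the Azure Storage queue and blob container so the sketch runs offline. It assumes each message body is simply the blob name, and it stops once the queue is empty, whereas the real app kept waiting for new messages.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Protocol


@dataclass
class Message:
    id: str
    body: str  # assumption: the body is the name of the blob to download


class NotificationQueue(Protocol):
    """Hypothetical queue interface (a real app would wrap an Azure Storage queue)."""
    def receive(self) -> Optional[Message]: ...
    def delete(self, message: Message) -> None: ...


class BlobSource(Protocol):
    """Hypothetical blob interface (a real app would wrap an Azure blob container)."""
    def download(self, name: str) -> bytes: ...


def process_pending(queue: NotificationQueue, blobs: BlobSource,
                    save: Callable[[str, bytes], None],
                    log: Callable[[str], None] = print) -> int:
    """Download the blob named by each queued message, then delete that message.

    Deleting only after a successful download means a failed download leaves
    the message on the queue to be picked up again later.
    """
    handled = 0
    log("Waiting for messages to arrive..")
    while (message := queue.receive()) is not None:
        log(f"Downloading {message.body}")
        save(message.body, blobs.download(message.body))
        log(f"Finished Downloading {message.body}")
        queue.delete(message)
        log("Deleted Message")
        handled += 1
    return handled


class InMemoryQueue:
    """Offline stand-in: a message stays queued until delete() is called."""
    def __init__(self, bodies: List[str]) -> None:
        self.messages = [Message(str(i), body) for i, body in enumerate(bodies)]

    def receive(self) -> Optional[Message]:
        return self.messages[0] if self.messages else None

    def delete(self, message: Message) -> None:
        self.messages.remove(message)


class InMemoryBlobs:
    """Offline stand-in for a blob container."""
    def __init__(self, blobs: Dict[str, bytes]) -> None:
        self.blobs = blobs

    def download(self, name: str) -> bytes:
        return self.blobs[name]


queue = InMemoryQueue(["video-a.mp4", "video-b.mp4"])
blobs = InMemoryBlobs({"video-a.mp4": b"encoded-a", "video-b.mp4": b"encoded-b"})
saved: Dict[str, bytes] = {}
handled = process_pending(queue, blobs, saved.__setitem__)
```

Deleting the message last mirrors the order of the log lines above. With Azure Storage queues, a received message that is never deleted becomes visible again after its visibility timeout, so a crashed download gets retried rather than silently lost.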

And just for good measure, a 450MB download from the UK to Australia took 1 min 34s.
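To put the two timings on a common footing, this short Python sketch converts them into average throughput. The first duration is taken directly from the "Downloading" and "Finished Downloading" log timestamps above (about 76.9 s, consistent with the ~1 min 17s quoted); the second uses the quoted 1 min 34s. It assumes the quoted sizes are decimal megabytes and ignores anything outside the logged window, such as encoding time.

```python
from datetime import datetime
from typing import Tuple

LOG_TIME_FORMAT = "%Y-%m-%d %H:%M:%S,%f"  # e.g. "2022-01-18 23:30:55,982"


def log_timestamp(line: str) -> datetime:
    """Parse the date and time fields at the start of a log line."""
    date_part, time_part = line.split(" ", 2)[:2]
    return datetime.strptime(f"{date_part} {time_part}", LOG_TIME_FORMAT)


def throughput(size_mb: float, seconds: float) -> Tuple[float, float]:
    """Return (MB/s, Mbit/s), treating sizes as decimal megabytes."""
    mb_per_s = size_mb / seconds
    return mb_per_s, mb_per_s * 8  # 8 bits per byte


# First test: duration from the "Downloading" / "Finished Downloading" log lines.
start = log_timestamp("2022-01-18 23:30:55,982 INFO Downloading Video-LG View The Feeling 4K Demo-HDUltra-20Mbps-20000.mp4")
end = log_timestamp("2022-01-18 23:32:12,891 INFO Finished Downloading Video-LG View The Feeling 4K Demo-HDUltra-20Mbps-20000.mp4")
first_seconds = (end - start).total_seconds()

# Second test: only the rounded duration (1 min 34 s) is quoted in the post.
second_seconds = 60 + 34

for label, size_mb, seconds in [("274MB", 274, first_seconds), ("450MB", 450, second_seconds)]:
    mb_s, mbit_s = throughput(size_mb, seconds)
    print(f"{label}: {seconds:.1f} s, {mb_s:.2f} MB/s (~{mbit_s:.1f} Mbit/s)")
```

By this arithmetic the two transfers averaged roughly 3.56 and 4.79 MB/s (about 28.5 and 38.3 Mbit/s) respectively between UK West and Australia Central.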

Conclusions

Microsoft reports that Azure's global network of data centers and edge nodes is heavily interconnected. This means that a VM running in any region should still have relatively quick connectivity and bandwidth to other permitted resources at rest in Azure, even distant ones. When choosing a faraway location for the VM, I intentionally skipped the United States, as it has the most data centers and is the most heavily connected, so a VM there could have given a less truthful picture of a client with difficult access to the data. The VM performed well regardless of this 'poor' connectivity. Incredible foresight shown by the teams that architected these networks!!

DON'T FORGET TO DELETE THE VM IF NOT NEEDED!!!

Cover Image credit: Brett Sayles on Pexels.