Azure Container Apps with Ollama for general AI inference

An Azure Container Apps (ACA) instance can run multiple containers: a main application container supported by one or more 'sidecar' containers. This gives us an ideal environment to build, for example, a web app with a complementary small language model (SLM) inference engine in the form of Ollama. Inference then happens entirely in private, with no worry about prompts being used as training data. We are also not limited to generative text. Ollama can support other modalities such as voice, image and even video, as long as enough compute resources are provided and a capable model is available. The methods discussed here would apply across those modalities too.

[PLEASE SEE A MUCH EASIER AND SHORTER VERSION OF THIS POST HERE, FEATURING DEEPSEEK. The DeepSeek variant of this post is CLI-only, whereas the following involves a web app with a UI.]

Here, we will see how to containerise an instance of Ollama and use it as part of a web app in Azure Container Apps. The web app performs general text inference with a general, non-fine-tuned model of our choosing.

Update Jan 2025 - (Phi3.5 by Microsoft was originally used in this post. If you opt for an advanced reasoning model such as DeepSeek-R1 instead, that will still work. Note that the model's data boundary is limited to the Azure Container App: the data never leaves the container.)

Prepare Ollama Docker Image for Azure

For the Docker image, we first pull the base image. We then run a container from it and hydrate/populate that container with the models we want to use after deployment. Next, we commit the container to create a new, pre-populated image. Finally, we push this new image to our container registry (Azure Container Registry).

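Pull the base Ollama Docker image (this will take some time):

```shell
docker pull ollama/ollama
```

Create a container and give it a name of your choice (I called mine 'baseollama'). We will also map the container's internal port 11434 to port 11434 on the host.

No volume is mounted for /root/.ollama here. The docker commit step does not include data stored in mounted volumes, so the downloaded models must live in the container's own filesystem to end up in the committed image:

```shell
docker run -d -p 11434:11434 --name baseollama ollama/ollama
```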

Let's quickly verify that there are no models in this container yet (no LLMs/SLMs should be listed):

```shell
docker exec -it baseollama ollama list
```
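The same check can also be made over Ollama's REST API, which the web app will use later. `GET /api/tags` returns a JSON object whose `models` array lists the locally available models.

Below is a minimal JavaScript sketch, assuming Node 18+ for the global `fetch`. `modelNames` and `listLocalModels` are hypothetical helper names. The `fetchImpl` parameter exists only so the function can be exercised without a live server.

```javascript
// Extract model names (e.g. "phi3.5:latest") from a GET /api/tags response.
function modelNames(tagsResponse) {
  const models = (tagsResponse && tagsResponse.models) || [];
  return models.map((m) => m.name);
}

// Query a running Ollama server; the default URL is the host port mapped above.
async function listLocalModels(baseUrl = "http://localhost:11434", fetchImpl = fetch) {
  const res = await fetchImpl(`${baseUrl}/api/tags`);
  if (!res.ok) {
    throw new Error(`Ollama returned HTTP ${res.status}`);
  }
  return modelNames(await res.json());
}

// Usage (with the 'baseollama' container running):
// listLocalModels().then((names) => console.log(names));
```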

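Now pull a capable small language model, such as Microsoft's Phi3.5 or DeepSeek-R1, into the 'baseollama' container (this will take some time). Note that ollama run downloads the model and then opens an interactive prompt; type /bye to exit it:

```shell
docker exec -it baseollama ollama run phi3.5:latest
```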

```shell
# If you wish to use DeepSeek instead (the 1.5b parameter model for better performance)
docker exec -it baseollama ollama run deepseek-r1:1.5b
```

After the Phi3.5 model (or any other Ollama-compatible model of your choice) has been downloaded, we commit this hydrated container to a separate, new image. Only the new image is pushed to the container registry, and we can continue to modify the base container locally as we like.

Create a new image from the updated container:

```shell
docker commit baseollama newtestllama
```

Push new Ollama image to Azure Container Registry

Now we can prepare and push the new Ollama image to Azure Container Registry:

Log in to your existing container registry in Azure:

```shell
# Log in to Azure and go through the login flow
az login

# Log in to the specific container registry
az acr login -n <your_registry_name>
```

Tag your new image like the following:

```shell
docker tag newtestllama <acr_name>.azurecr.io/<repository_name>:latest
```
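The tag must be the registry's login server followed by a repository name and a tag. Below is a small sketch that assembles and sanity-checks that reference. It rests on these assumptions:

- ACR registry names are 5 to 50 alphanumeric characters.
- Docker repository names must be lowercase (the pattern used here is simplified).

`acrImageRef` is a hypothetical helper name, and the example values are placeholders.

```javascript
// Build "<registry>.azurecr.io/<repository>:<tag>" for docker tag / docker push.
function acrImageRef(registryName, repository, tag = "latest") {
  // Assumption: ACR registry names are 5-50 alphanumeric characters.
  if (!/^[A-Za-z0-9]{5,50}$/.test(registryName)) {
    throw new Error(`Invalid registry name: ${registryName}`);
  }
  // Simplified Docker rule: lowercase path components made of [a-z0-9]
  // runs joined by '.', '_' or '-', separated by '/'.
  const component = "[a-z0-9]+(?:[._-][a-z0-9]+)*";
  if (!new RegExp(`^${component}(?:/${component})*$`).test(repository)) {
    throw new Error(`Invalid repository name: ${repository}`);
  }
  // Tags: up to 128 word characters, dots and dashes, not starting with . or -.
  if (!/^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$/.test(tag)) {
    throw new Error(`Invalid tag: ${tag}`);
  }
  // The registry's login server name is lowercase.
  return `${registryName.toLowerCase()}.azurecr.io/${repository}:${tag}`;
}

// Usage: docker tag newtestllama <result of acrImageRef("myregistry", "ollama-phi35")>
```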

Now push the tagged image to Azure Container Registry (this will take some time):

```shell
docker push <acr_name>.azurecr.io/<repository_name>:latest
```

Wait until the upload is complete. In the meantime, let's take a look at the web app.

Use Ollama for ASP.NET Web App

Next, we will prepare an ASP.NET web app that takes user input and communicates with the Ollama model through a local API server. This 'local' API endpoint still applies once the web application is deployed to Azure Container Apps. Here is some rough code that sends the user's input to Ollama over the local endpoint and streams the sanitised response back to the page:

```csharp
// Assumes an ASP.NET Core (.NET 6+) project with ImplicitUsings enabled
// and the Newtonsoft.Json NuGet package installed.
using System.Text;
using Microsoft.AspNetCore.Mvc;
using Newtonsoft.Json;

namespace AIWebDemoACA.Controllers
{
    public class HomeController : Controller
    {
        // One shared HttpClient for the app instead of a new one per request.
        private static readonly HttpClient client = new HttpClient();

        private readonly ILogger<HomeController> _logger;

        public HomeController(ILogger<HomeController> logger)
        {
            _logger = logger;
        }

        // Serves the page below (Views/Home/Index.cshtml) for the default route.
        public IActionResult Index() => View();

        // Shape of each JSON line Ollama streams back from /api/generate.
        public class ResponseModel
        {
            [JsonProperty("model")]
            public string Model { get; set; }

            [JsonProperty("created_at")]
            public DateTime CreatedAt { get; set; }

            [JsonProperty("response")]
            public string Response { get; set; }

            [JsonProperty("done")]
            public bool Done { get; set; }
        }

        [HttpPost("stream")]
        public async IAsyncEnumerable<string> StreamResponse([FromBody] System.Text.Json.JsonElement userInput)
        {
            // Expects a body like {"userInput": "..."}; return nothing otherwise.
            if (!userInput.TryGetProperty("userInput", out var inputElement))
            {
                yield break;
            }
            string input = inputElement.GetString() ?? string.Empty;

            // Serialise the request so quotes and newlines in the prompt are escaped.
            // Change the model name to deepseek-r1:1.5b for DeepSeek.
            string body = JsonConvert.SerializeObject(new
            {
                model = "phi3.5:latest",
                prompt = input,
                stream = true
            });

            using var request = new HttpRequestMessage(HttpMethod.Post, "http://localhost:11434/api/generate")
            {
                Content = new StringContent(body, Encoding.UTF8, "application/json")
            };

            // ResponseHeadersRead lets us start reading before the full answer exists.
            using var response = await client.SendAsync(request, HttpCompletionOption.ResponseHeadersRead);
            response.EnsureSuccessStatusCode();

            using var stream = await response.Content.ReadAsStreamAsync();
            using var reader = new StreamReader(stream);

            // Ollama streams one JSON object per line until "done" is true.
            string? line;
            while ((line = await reader.ReadLineAsync()) != null)
            {
                if (string.IsNullOrWhiteSpace(line)) continue;

                var chunk = JsonConvert.DeserializeObject<ResponseModel>(line);
                if (chunk?.Response is { Length: > 0 } text)
                {
                    // Kept from the original: a brief await between tokens, which
                    // appears to help each token reach the browser promptly.
                    await Task.Delay(1);
                    yield return text;
                }
                if (chunk?.Done == true) break;
            }
        }
    }
}
```

The controller code for handling streaming data from the small language model through Ollama
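For reference, the controller speaks Ollama's /api/generate protocol. The request is a POST body with `model`, `prompt` and `stream` fields. When `stream` is true, the answer comes back as newline-delimited JSON: one object per line, each carrying a `response` fragment and a `done` flag.

The same exchange can be sketched in JavaScript, for example to test Ollama directly from Node 18+. `buildGenerateBody`, `createNdjsonParser` and `collectResponse` are hypothetical helper names. The parser buffers partial lines because network chunks need not end on a line boundary.

```javascript
// Build the POST /api/generate body. JSON.stringify escapes quotes,
// backslashes and newlines, so arbitrary user text is safe to send.
function buildGenerateBody(prompt, model = "phi3.5:latest") {
  return JSON.stringify({ model, prompt, stream: true });
}

// Incrementally parse newline-delimited JSON. push() takes each decoded text
// chunk and returns the objects for the complete lines seen so far; a
// trailing partial line stays buffered until the next chunk arrives.
function createNdjsonParser() {
  let buffer = "";
  return {
    push(text) {
      buffer += text;
      const lines = buffer.split("\n");
      buffer = lines.pop(); // incomplete last line, or "" after a newline
      return lines.filter((l) => l.trim() !== "").map((l) => JSON.parse(l));
    },
    // Parse whatever remains when the stream ends without a final newline.
    end() {
      const rest = buffer.trim();
      buffer = "";
      return rest === "" ? [] : [JSON.parse(rest)];
    },
  };
}

// Join the "response" fragments into the full answer text.
function collectResponse(objects) {
  return objects.map((o) => o.response || "").join("");
}

// Usage against a local Ollama (Node 18+):
// fetch("http://localhost:11434/api/generate", { method: "POST",
//   headers: { "Content-Type": "application/json" },
//   body: buildGenerateBody('Say "hello"') });
```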

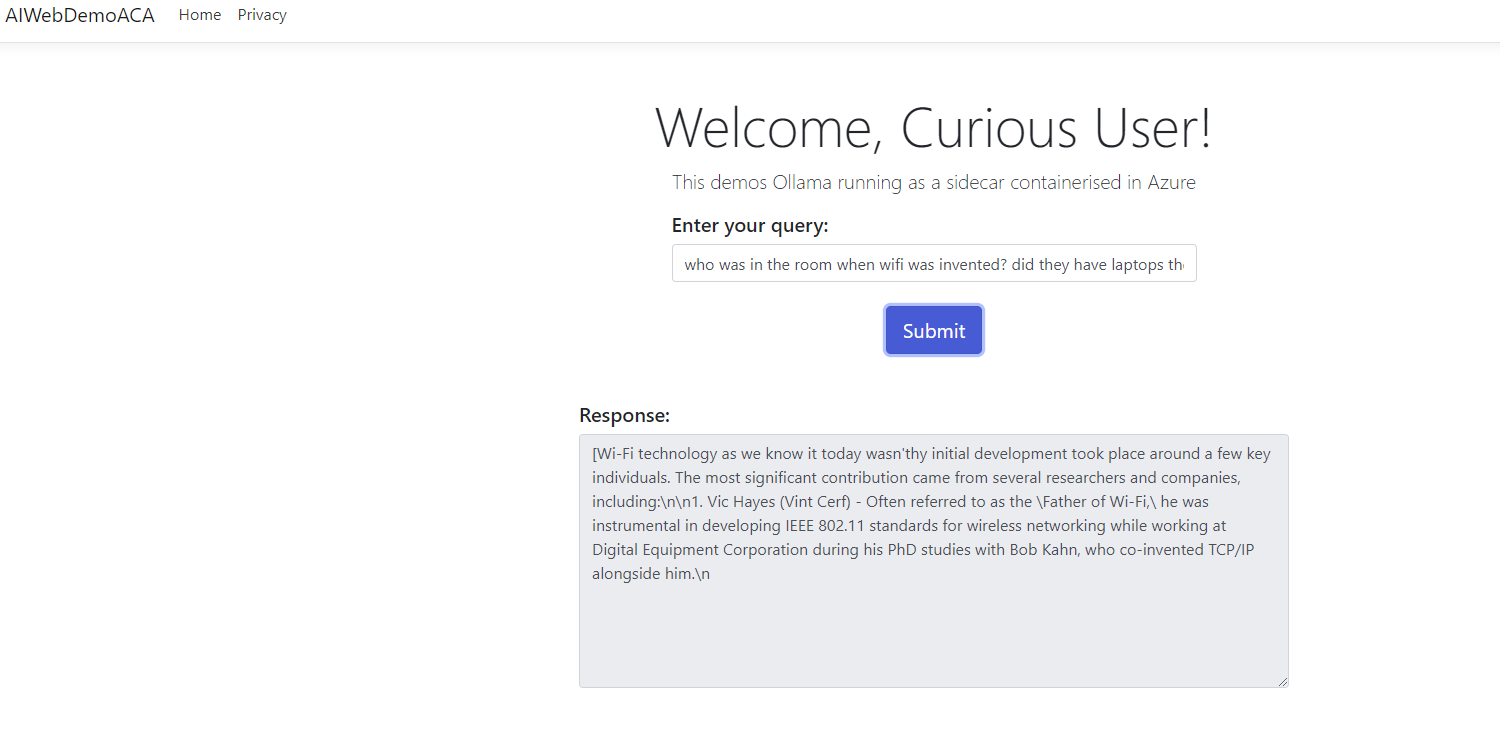

The front end would look like the following:

```cshtml
@{
    ViewData["Title"] = "Home Page";
}

<html>
<body>
    <link href="https://stackpath.bootstrapcdn.com/bootstrap/4.5.2/css/bootstrap.min.css" rel="stylesheet" />

    <div class="container mt-5">
        <div class="text-center">
            <h2 class="display-4">Welcome, Curious User!</h2>
            <p class="lead">This demos Ollama running as a sidecar containerised in Azure</p>
        </div>

        <div class="row justify-content-center">
            <div class="col-md-6">
                <div class="form-group">
                    <label for="userInput" class="h5">Enter your query:</label>
                    <input type="text" id="userInput" name="userInput" class="form-control" placeholder="Ask away..." />
                </div>
                <div class="text-center">
                    <button class="btn btn-primary btn-lg mt-2" onclick="startStreaming()">Submit</button>
                </div>
            </div>
        </div>

        <div class="row justify-content-center mt-5">
            <div class="col-md-8">
                <label for="output" class="h5">Response:</label>
                <textarea id="output" class="form-control" readonly rows="10" placeholder="Using Phi3.5 😊 (or DeepSeek)"></textarea>
            </div>
        </div>
    </div>

    <script src="https://code.jquery.com/jquery-3.5.1.slim.min.js"></script>
    @* Bootstrap 4.x expects Popper 1.x (the popper.js package) *@
    <script src="https://cdn.jsdelivr.net/npm/popper.js@1.16.1/dist/umd/popper.min.js"></script>
    <script src="https://stackpath.bootstrapcdn.com/bootstrap/4.5.2/js/bootstrap.min.js"></script>

    <script>
        async function startStreaming() {
            const output = document.getElementById('output');
            output.value = "";
            const userInput = document.getElementById('userInput').value;

            try {
                const response = await fetch('/stream', {
                    method: 'POST',
                    headers: { 'Content-Type': 'application/json' },
                    body: JSON.stringify({ userInput: userInput })
                });
                if (!response.ok) {
                    throw new Error('HTTP ' + response.status);
                }

                const reader = response.body.getReader();
                const decoder = new TextDecoder();

                while (true) {
                    const { done, value } = await reader.read();
                    if (done) break;

                    // The action streams a JSON array of strings (["Hel","lo",...]).
                    // This quick clean-up drops the quotes and separators, but it also
                    // drops quotes and commas that are part of the model's answer.
                    const chunk = decoder.decode(value, { stream: true });
                    if (chunk != null && chunk != " ," && chunk != ",") {
                        output.value += chunk.replace(/"/g, "").replace(",", "");
                    }
                }

                output.value += "\nStream complete.\n";
            } catch (error) {
                console.error('Error streaming data:', error);
            }
        }
    </script>
</body>
</html>
```

Frontend code for the web page
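The page receives the action's output as a JSON array of strings (for example `["Hel","lo"]`), which is what the quote and comma stripping above works around. That shortcut has two side effects:

- It removes quotes and commas that belong to the answer.
- It leaves escape sequences such as `\n` or `\u00e9` undecoded.

Below is a sketch of an incremental reader that extracts each complete string element instead. It assumes the body really is a JSON array of strings, as that stripping implies. `createJsonStringArrayReader` is a hypothetical name.

```javascript
// Incrementally decode a streamed JSON array of strings, e.g. ["Hel","lo"].
// feed() takes each decoded text chunk and returns the strings completed in
// it; partial strings (and split escape sequences) are kept for next time.
function createJsonStringArrayReader() {
  let inString = false; // currently inside a "..." element
  let escaped = false;  // previous character was a backslash
  let raw = "";         // raw, still-escaped characters of the current string
  return {
    feed(chunk) {
      const completed = [];
      for (const ch of chunk) {
        if (!inString) {
          // Outside strings we only expect '[', ']', ',' and whitespace.
          if (ch === '"') {
            inString = true;
            raw = "";
          }
          continue;
        }
        if (escaped) {
          raw += ch;
          escaped = false;
        } else if (ch === "\\") {
          raw += ch;
          escaped = true;
        } else if (ch === '"') {
          // End of element: JSON.parse resolves escapes such as \n and \u00e9.
          completed.push(JSON.parse(`"${raw}"`));
          inString = false;
        } else {
          raw += ch;
        }
      }
      return completed;
    },
  };
}
```

To use it in the page, create one reader per request. Then, inside the read loop, append every string returned by `feed(decoder.decode(value, { stream: true }))` to `output.value` in place of the replace calls.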

The Program.cs file can be as follows:

```csharp
var builder = WebApplication.CreateBuilder(args);

builder.Services.AddControllersWithViews();

var app = builder.Build();

if (!app.Environment.IsDevelopment())
{
    app.UseExceptionHandler("/Home/Error");
    app.UseHsts();
}

app.UseHttpsRedirection();
app.UseStaticFiles();
app.UseRouting();
app.UseAuthorization();

app.MapControllerRoute(
    name: "default",
    pattern: "{controller=Home}/{action=Index}/{id?}");

app.Run();
```

Program.cs file

Deploy Web App to Azure Container Apps

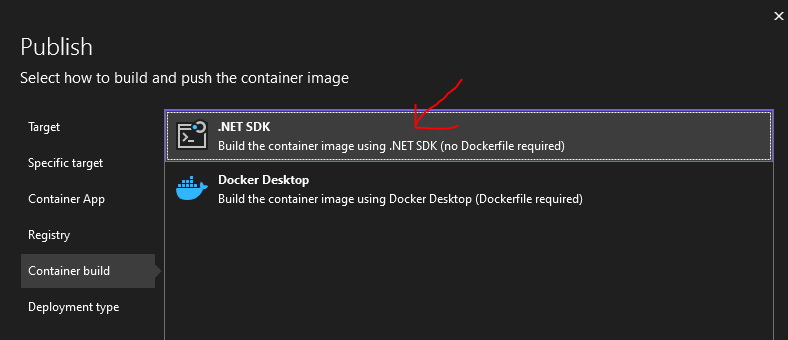

After building the solution successfully, right-click the web project and choose Publish to Azure > Azure Container Apps (Linux) > [Your_New_or_ExistingContainerApp] > [Your_New_or_ExistingRegistry] > .NET SDK Build:

Then select Finish, then Publish, to publish the web app to Azure Container Apps. At first, this will appear as a single container in the Azure Container App.

Configure Azure Container App with Ollama container as sidecar

At this point, we have deployed just the web app to Azure Container Apps. We will now bring in our Ollama image from Azure Container Registry as a complementary sidecar container. It goes in the same Azure Container App instance where our web app is deployed; the Ollama container does not need to exist as a separate Azure Container Apps instance.

In our web app code, we told the app to find the Ollama container at http://localhost:11434/api/generate. Containers in the same ACA instance share the same network, so the web app can reach the Ollama container on localhost. This endpoint will therefore work correctly once we set a few parameters in Azure.
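A quick way to confirm that Ollama is reachable is to call a lightweight endpoint such as `GET /api/version`, which returns a small JSON object with a `version` field. The same check works locally against the 'baseollama' container mapped to port 11434.

Below is a JavaScript sketch, assuming Node 18+. `ollamaVersion` is a hypothetical name, and `fetchImpl` is injectable only so the function can be exercised without a live server.

```javascript
// Ask an Ollama server for its version; throws if it responds with an error.
async function ollamaVersion(baseUrl = "http://localhost:11434", fetchImpl = fetch) {
  const res = await fetchImpl(`${baseUrl}/api/version`);
  if (!res.ok) {
    throw new Error(`Ollama responded with HTTP ${res.status}`);
  }
  const body = await res.json();
  return body.version;
}

// Usage: ollamaVersion().then((v) => console.log("Ollama", v));
```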

Add Ollama as sidecar

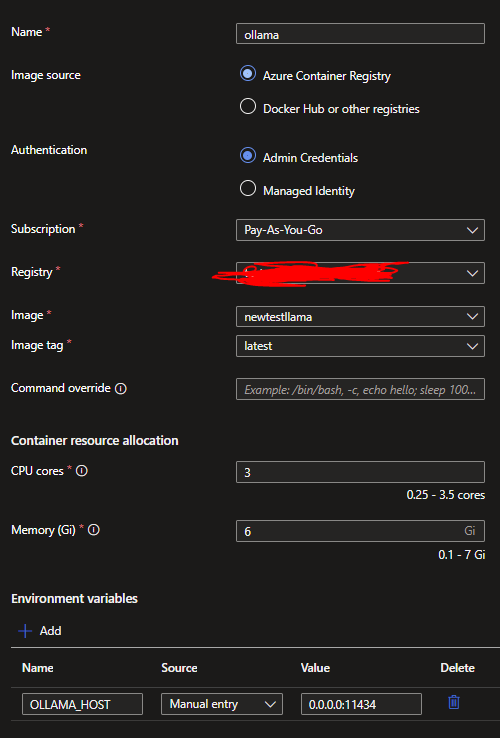

First, go to your Azure Container App > Containers > Edit And Deploy > Add Sidecar. Then set the parameters as follows. Make sure you set the environment variable OLLAMA_HOST as shown below, since the Ollama container needs it to operate normally and serve requests on port 11434. Then click Save:

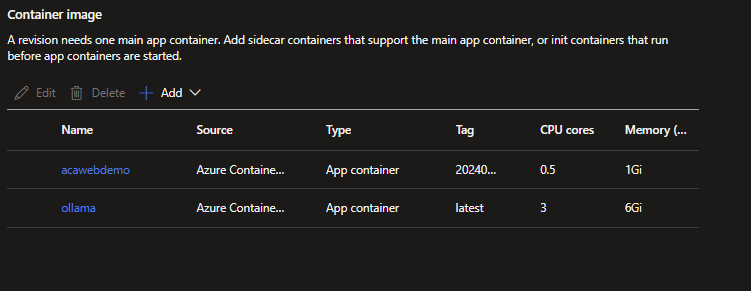

You should now have two containers listed, similar to below. If needed, rebalance the CPU and memory between the two containers, giving more resources to the Ollama container, as it uses much more. Then click Create.
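Rebalancing is easier with the platform's sizing rule in mind. The rule used here is an ASSUMPTION to verify against the current Azure Container Apps documentation. On the Consumption plan, the CPU and memory of all containers in an app must add up to an allowed pair: vCPU in 0.25 steps (capped at 4 in this sketch), with 2 GiB of memory per vCPU.

The hypothetical `splitResources` helper below splits such a budget between the web app and the Ollama sidecar. It keeps each container on whole 0.25 vCPU steps, with at least one step each.

```javascript
// ASSUMPTION (verify in current Azure docs): Consumption plan totals use
// 0.25 vCPU steps with 2 GiB of memory per vCPU; 4 vCPU is assumed as the cap.
const MAX_VCPU = 4;

// Split a total vCPU budget, giving Ollama roughly `ollamaShare` of it.
function splitResources(totalCpu, ollamaShare = 0.75) {
  const steps = Math.round(totalCpu / 0.25);
  if (steps < 2 || steps * 0.25 !== totalCpu || totalCpu > MAX_VCPU) {
    throw new Error(`Unsupported total vCPU: ${totalCpu}`);
  }
  // Give each container at least one 0.25 vCPU step.
  const ollamaSteps = Math.min(steps - 1, Math.max(1, Math.round(steps * ollamaShare)));
  const toAlloc = (s) => ({ cpu: s * 0.25, memoryGi: s * 0.5 });
  return { webApp: toAlloc(steps - ollamaSteps), ollama: toAlloc(ollamaSteps) };
}

// Usage: splitResources(2) gives Ollama 1.5 vCPU / 3 GiB and the web app 0.5 vCPU / 1 GiB.
```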

After clicking Create, wait a few seconds while Azure configures the resources.

Set Ingress for Azure Container App

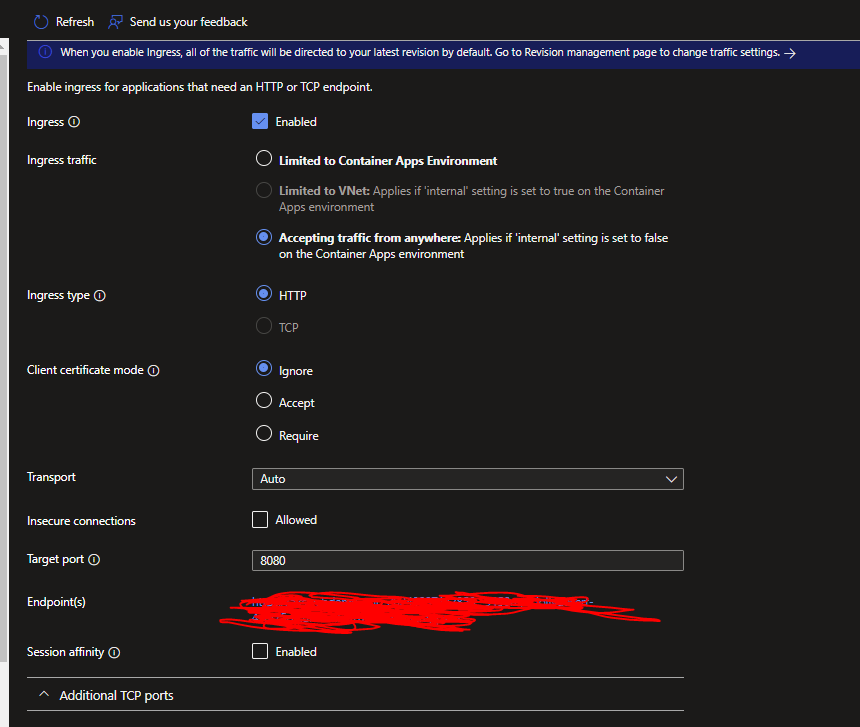

Under the Ingress settings of your ACA instance, set the following to allow you to view the web page later, making sure to untick Insecure Connections. Then click Save:

Test Ollama on Azure Container Apps

From the Overview panel, click your Application Url and test the app with the Phi3.5 model running inside an Ollama container! Requests to the language model are completely private and are not sent outside the Container App.
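To test the deployed app without a browser, you can post to the same `/stream` route the page uses and print the body as it arrives. Below is a Node 18+ sketch. The URL is a placeholder for the Application Url from the Overview panel, and `streamAnswer` is a hypothetical name. `fetchImpl` is injectable purely for testing.

```javascript
// POST a prompt to the web app's /stream action and return the raw streamed
// body (a JSON array of strings), calling onChunk for each piece received.
async function streamAnswer(appUrl, prompt, onChunk = () => {}, fetchImpl = fetch) {
  const res = await fetchImpl(`${appUrl}/stream`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ userInput: prompt }),
  });
  if (!res.ok) {
    throw new Error(`App responded with HTTP ${res.status}`);
  }
  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  let text = "";
  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    const piece = decoder.decode(value, { stream: true });
    onChunk(piece);
    text += piece;
  }
  return text + decoder.decode(); // flush any buffered partial character
}

// Usage: streamAnswer("https://<your-app-url>", "Why is the sky blue?", (c) => process.stdout.write(c));
```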

Performance of Azure Container Apps for Generative AI models

If you require more inference performance and compute resources, please see this page here and request more resources for your Azure subscription.
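Before requesting more resources, it helps to measure generation speed. Ollama's final streamed object (the one with `done: true`) includes two relevant fields:

- `eval_count`: the number of generated tokens.
- `eval_duration`: the generation time in nanoseconds.

The `ResponseModel` class in the controller maps only four fields, so these would need extra properties to be surfaced there. Below is a small JavaScript sketch that computes tokens per second from that object; the function name is hypothetical.

```javascript
// Tokens generated per second, from the final /api/generate object
// (eval_duration is reported in nanoseconds).
function tokensPerSecond(finalChunk) {
  const { eval_count: count, eval_duration: durationNs } = finalChunk;
  if (!count || !durationNs) return 0; // fields missing or zero: nothing to measure
  return count / (durationNs / 1e9);
}
```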