Azure Container Apps with DeepSeek-R1

The DeepSeek-R1 large language model can easily run on Azure Container Apps with as little as 3 CPU cores and 6 GB of RAM, which gives a fast experience with the 1.5 billion parameter model. You can allocate more CPU and RAM if you choose. DeepSeek-R1 comes in several parameter sizes (1.5b, 7b, 8b, 14b, 32b, 70b and 671b), and the larger sizes need correspondingly more compute. Here we look only at the 1.5 billion parameter model, running with Ollama on Azure Container Apps; if you need a larger model, increase the compute resources available to the app. Compared with using our own machine, Azure Container Apps gives us Azure's fast network speeds for downloading large language models of various sizes, plus scalable compute.

This blog post describes a more concise and much faster way to get up and running with Ollama on Azure Container Apps than the approach previously discussed here. There is no web app this time; we will use the command line to interact with the model.

For brevity, I will assume the following:

- You have an instance of Azure Container Registry

- You are using the Azure CLI (logged in with az login) or Azure Cloud Shell.

Add Ollama to Azure Container Registry

First, we need to populate our container registry with the Ollama Docker image directly using "acr import". There is no need to pull the image onto our machine:

az acr import --name [your_azurecontainerregistry_name] --source docker.io/ollama/ollama:latest --image ollama:latest

Wait until the import completes.

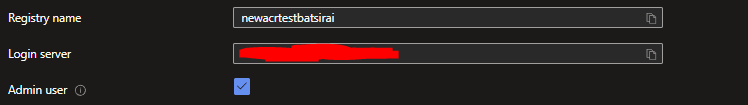
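To confirm the import finished, you can list the tags stored in the ollama repository of your registry. The sketch below wraps that check in a small shell function; the function name check_ollama_import is hypothetical, and the registry name (without .azurecr.io) is a placeholder you pass in.

```shell
# Hypothetical helper: list the tags of the imported "ollama" repository.
# $1 = your registry name (placeholder, without the .azurecr.io suffix).
check_ollama_import() {
  local registry="$1"
  # Prints a table of tags; "latest" should appear once the import is done.
  az acr repository show-tags --name "$registry" --repository ollama --output table
}
```

For example, check_ollama_import myregistry should print a table that includes latest after a successful import.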

Enable Admin User Credentials for Azure Container Registry

In the Azure Portal, go to your container registry, open Access keys and make sure Admin user is checked. The setting saves automatically.

Enabling the admin user lets the Azure Container App we create in the next step authenticate to this registry and pull images from it.

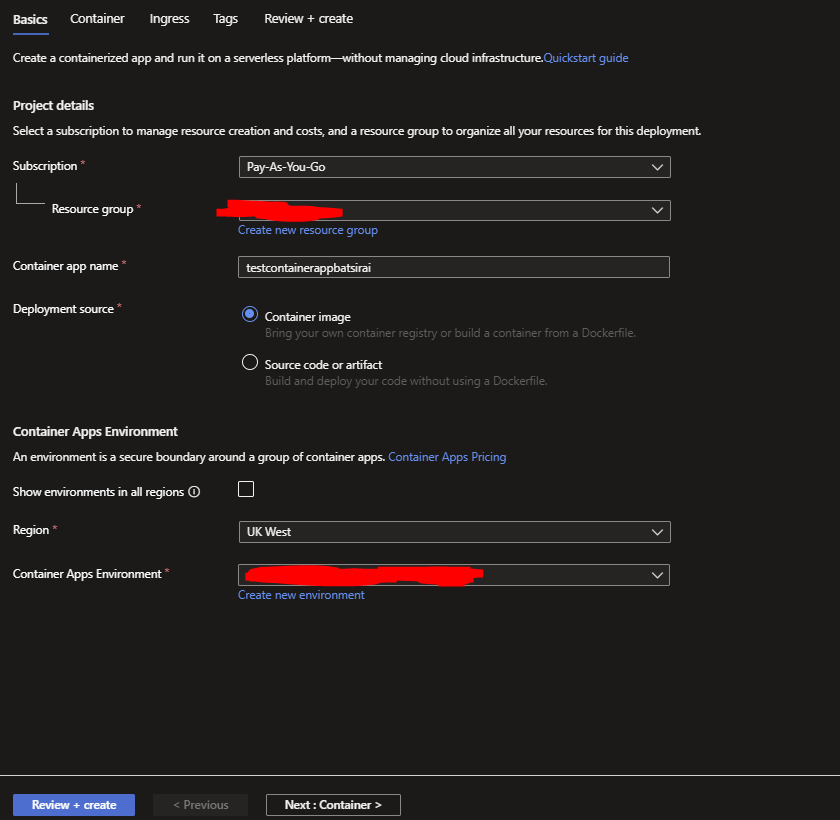
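If you prefer to stay on the command line, the same setting can be switched on with az acr update. A minimal sketch, using a hypothetical helper name and taking your registry name as the only argument:

```shell
# Hypothetical helper: CLI equivalent of checking "Admin user" under Access keys.
# $1 = your registry name (placeholder).
enable_acr_admin() {
  local registry="$1"
  az acr update --name "$registry" --admin-enabled true
}
```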

Create Azure Container App

In the Azure Portal, search for and select Container Apps, then create a new Container App.

Set your basic details (subscription, resource group, app name, region and Container Apps environment):

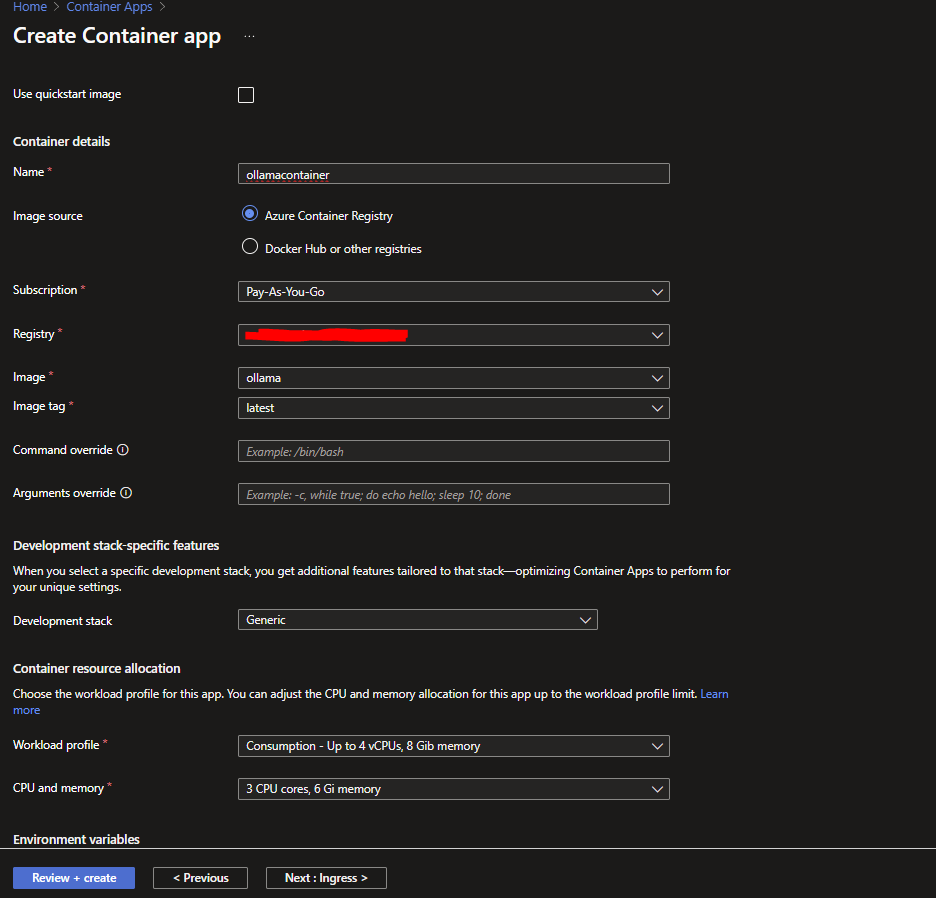

Set the container details, selecting the Ollama image that is already in your registry. Choose 3 CPU cores and 6 GB of RAM for our compute needs. No environment variables are required.

Select Review + create to create the Azure Container App from the Ollama Docker image.
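The portal steps above can also be approximated from the CLI with az containerapp create. This is a sketch under several assumptions: a Container Apps environment already exists, the registry admin user from the previous step is enabled, and your environment accepts the 3 vCPU / 6.0Gi pairing. Pinning one replica (--min-replicas 1 and --max-replicas 1) is a choice made for this sketch so there is always a running replica to exec into; the helper name create_ollama_app and all of its arguments are placeholders.

```shell
# Hypothetical helper: create the Ollama Container App from the CLI.
# $1 = app name, $2 = resource group, $3 = existing Container Apps environment,
# $4 = registry name (all placeholders).
create_ollama_app() {
  local app="$1" group="$2" environment="$3" registry="$4"
  local server="${registry}.azurecr.io"
  local user pass
  # Read the admin credentials enabled earlier so the app can pull the image.
  user="$(az acr credential show --name "$registry" --query username --output tsv)"
  pass="$(az acr credential show --name "$registry" --query "passwords[0].value" --output tsv)"

  # Same settings as the portal walkthrough: Ollama image, 3 CPU cores, 6 GB RAM,
  # no environment variables. One replica is kept running (sketch assumption).
  az containerapp create \
    --name "$app" \
    --resource-group "$group" \
    --environment "$environment" \
    --image "${server}/ollama:latest" \
    --registry-server "$server" \
    --registry-username "$user" \
    --registry-password "$pass" \
    --cpu 3 --memory 6.0Gi \
    --min-replicas 1 --max-replicas 1
}
```

For example: create_ollama_app ollama-r1 my-rg my-env myregistry.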

Run DeepSeek-R1 on Azure Container App

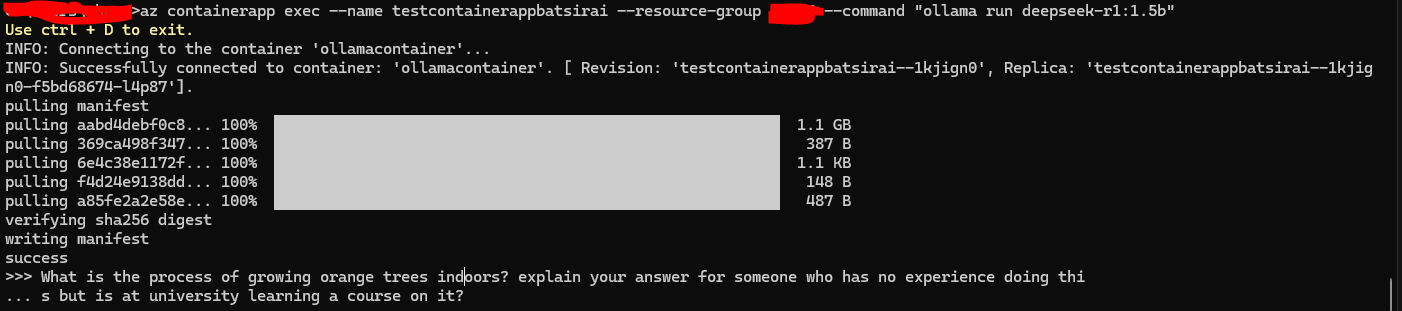

Go back to your CLI and run:

az containerapp exec --name [your_containerapp_name] --resource-group [your_resource_group] --command "ollama run deepseek-r1:1.5b"

This executes an Ollama run command inside the Azure Container App.

If the connection to the underlying AKS cluster drops while this command is running, just run it again; it should download the R1 model and then wait for your prompt.
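One option, sketched here as an assumption rather than a required step, is to download the model in its own exec session with ollama pull before starting the interactive run. If that session drops, re-running the pull should pick up from the layers already downloaded, and the later ollama run then starts without a download. The helper name is hypothetical and the arguments are placeholders.

```shell
# Hypothetical helper: pull DeepSeek-R1 1.5b inside the Container App
# without opening an interactive chat session.
# $1 = container app name, $2 = resource group (placeholders).
pull_deepseek() {
  local app="$1" group="$2"
  az containerapp exec --name "$app" --resource-group "$group" \
    --command "ollama pull deepseek-r1:1.5b"
}
```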

You are now in an interactive Ollama session using DeepSeek-R1, and you can ask away as normal, benefiting from fast inference in Azure and scalable compute if you decide to scale up.
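Scaling up can be done with az containerapp update. A minimal sketch, where the default of 4 vCPU and 8.0Gi is only an example pairing assumed to be allowed by your environment, and the helper name and arguments are placeholders:

```shell
# Hypothetical helper: give the Ollama app more compute, e.g. before trying
# a larger DeepSeek-R1 size.
# $1 = app name, $2 = resource group, $3 = CPU cores (default 4),
# $4 = memory (default 8.0Gi). Defaults are example values, not requirements.
scale_up_ollama() {
  local app="$1" group="$2" cpu="${3:-4}" memory="${4:-8.0Gi}"
  az containerapp update --name "$app" --resource-group "$group" \
    --cpu "$cpu" --memory "$memory"
}
```

The update rolls out a new revision, and since the model in this setup lives in the container's own filesystem, expect to download it again in the new replica.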

REMEMBER TO DELETE UNUSED AZURE RESOURCES WHEN YOU ARE DONE!!
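A cleanup sketch, assuming you want to keep the resource group, Container Apps environment and registry but remove what this walkthrough added. The helper name and arguments are placeholders; if you created a dedicated resource group for this experiment, az group delete on that group removes everything in one step instead.

```shell
# Hypothetical helper: undo the changes from this walkthrough.
# $1 = container app name, $2 = resource group, $3 = registry name (placeholders).
cleanup_ollama() {
  local app="$1" group="$2" registry="$3"
  # Delete the Container App (the Container Apps environment is left in place).
  az containerapp delete --name "$app" --resource-group "$group" --yes
  # Remove the imported Ollama image from the registry.
  az acr repository delete --name "$registry" --image ollama:latest --yes
  # Switch the registry admin user back off.
  az acr update --name "$registry" --admin-enabled false
}
```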