Azure AI Speech Service Fast Transcription

Azure recently updated its Speech to Text service to introduce what is known as Fast Transcription. As the name suggests, it aims to improve Speech to Text transcription turnaround time. At the time of this writing it is in preview through a REST endpoint, and it still comes with the same features as before, such as profanity masking and speaker diarization. Although largely similar, it should not be confused with Azure AI Speech Real-time Transcription (which appears to be Microsoft's implementation and hosted service around OpenAI's RealTime API 🤔💭).

Some clarifications:

- Azure AI Speech Real-time Transcription - Azure's service for transcribing 'live' audio as it streams from an input device.

- Azure AI Speech Fast Transcription - Azure's transcription service for static files that have to be uploaded as a payload to the service (which this post discusses).

- OpenAI RealTime API - OpenAI's state-of-the-art API service for continuous dialog with their multimodal models, with interruption capabilities and tone/sentiment understanding.

We will take a look at how it performs compared to the regular Azure AI Speech to Text Service I wrote about just 7 months ago.

Azure AI Speech Fast Transcription Example

The updated Speech Service with Fast Transcription works no differently from a standard Azure AI Speech Service call: build the endpoint URL using the Fast Transcription specific API, provide a subscription key, send the audio file as a multipart payload along with the request headers, and deserialize the response. The key difference is the inference speed, which we will see shortly.

Using this audio sample (which is from the Last Week In AI podcast):

And using the following code:

    using System;
    using System.Collections.Generic;
    using System.Diagnostics;
    using System.IO;
    using System.Net.Http;
    using System.Net.Http.Headers;
    using System.Text;
    using System.Threading.Tasks;
    using Newtonsoft.Json; // NuGet package: Newtonsoft.Json

    public class Program
    {
        public static async Task Main(string[] args)
        {
            var url = "https://[your_azure_ai_services_instances].cognitiveservices.azure.com/speechtotext/transcriptions:transcribe?api-version=2024-05-15-preview";
            var subscriptionKey = "[YOUR_SUB_KEY]";
            var audioFilePath = "[Path_to_audio]\\podcastwave.mp3";
            var stopwatch = new Stopwatch();

            // Transcription options sent alongside the audio as the "definition" form part.
            var definition = @"
            {
                ""locales"": [""en-US""],
                ""profanityFilterMode"": ""Masked"",
                ""channels"": [0, 1]
            }";

            using (var client = new HttpClient())
            {
                using (var content = new MultipartFormDataContent())
                {
                    // The audio file goes in the "audio" form part as raw bytes.
                    var fileContent = new ByteArrayContent(await File.ReadAllBytesAsync(audioFilePath));
                    fileContent.Headers.ContentType = MediaTypeHeaderValue.Parse("application/octet-stream");
                    content.Add(fileContent, "audio", "podcastwave");
                    content.Add(new StringContent(definition, Encoding.UTF8, "application/json"), "definition");

                    client.DefaultRequestHeaders.Add("Ocp-Apim-Subscription-Key", subscriptionKey);
                    client.DefaultRequestHeaders.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));

                    // Time the round trip: upload, transcription, download, and deserialization.
                    stopwatch.Start();
                    var response = await client.PostAsync(url, content);

                    if (response.IsSuccessStatusCode)
                    {
                        var result = await response.Content.ReadAsStringAsync();
                        Console.WriteLine("Response: ");
                        var transcriptData = JsonConvert.DeserializeObject<FastTranscript>(result);
                        Console.WriteLine(transcriptData?.combinedPhrases[0].text);

                        stopwatch.Stop();
                        var elapsedSeconds = stopwatch.Elapsed.TotalSeconds;
                        Console.WriteLine($"Transcription took {elapsedSeconds:0.####} seconds");
                    }
                    else
                    {
                        // Print the status code and the service's error body.
                        Console.WriteLine($"Error: {response.StatusCode}");
                        Console.WriteLine(await response.Content.ReadAsStringAsync());
                    }
                }
            }
        }
    }

Quick HTTP Call to Azure AI Speech Service

    public class CombinedPhrase
    {
        public int channel { get; set; }
        public string text { get; set; }
    }

    public class Phrase
    {
        public int channel { get; set; }
        public int offset { get; set; }
        public int duration { get; set; }
        public string text { get; set; }
        public List<Word> words { get; set; }
        public string locale { get; set; }
        public double confidence { get; set; }
    }

    public class FastTranscript
    {
        public int duration { get; set; }
        public List<CombinedPhrase> combinedPhrases { get; set; }
        public List<Phrase> phrases { get; set; }
    }

    public class Word
    {
        public string text { get; set; }
        public int offset { get; set; }
        public int duration { get; set; }
    }

Response Object for returning JSON From Azure AI Speech Service with Fast Transcription
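The example in this post only prints `combinedPhrases[0].text`, but the response object also carries a `phrases` list with per-phrase timing. As a rough sketch of how that list could be used, the snippet below prints each phrase with its start time. It makes several assumptions: `offset` and `duration` are taken to be milliseconds, the JSON is a hand-written sample rather than real service output, `SamplePhrase`, `SampleTranscript`, and `PhraseFormatter` are hypothetical names introduced here, and `System.Text.Json` is swapped in for Newtonsoft.Json only so the snippet needs no extra package.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hand-written sample payload for illustration only; it is NOT real service output.
var json = @"
{
  ""duration"": 3000,
  ""phrases"": [
    { ""channel"": 0, ""offset"": 0,    ""duration"": 1200, ""text"": ""Hello there."" },
    { ""channel"": 0, ""offset"": 1500, ""duration"": 1500, ""text"": ""Welcome back."" }
  ]
}";

var transcript = JsonSerializer.Deserialize<SampleTranscript>(json);

// Print one line per phrase, prefixed with its start time and channel.
foreach (var phrase in transcript?.phrases ?? new List<SamplePhrase>())
{
    Console.WriteLine(PhraseFormatter.Format(phrase));
}

// Trimmed-down, hypothetical copies of the response classes so the sketch stands alone.
public class SamplePhrase
{
    public int channel { get; set; }
    public int offset { get; set; }    // assumed to be milliseconds
    public int duration { get; set; }  // assumed to be milliseconds
    public string text { get; set; } = "";
}

public class SampleTranscript
{
    public int duration { get; set; }
    public List<SamplePhrase> phrases { get; set; } = new();
}

public static class PhraseFormatter
{
    // Renders one phrase as "[mm:ss.fff ch<N>] text".
    public static string Format(SamplePhrase phrase)
    {
        var start = TimeSpan.FromMilliseconds(phrase.offset);
        return $"[{start.ToString(@"mm\:ss\.fff")} ch{phrase.channel}] {phrase.text}";
    }
}
```

With the sample payload, the second phrase would be rendered as `[00:01.500 ch0] Welcome back.`. If the timing unit turns out to differ for a given API version, only the `TimeSpan.FromMilliseconds` call needs to change.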

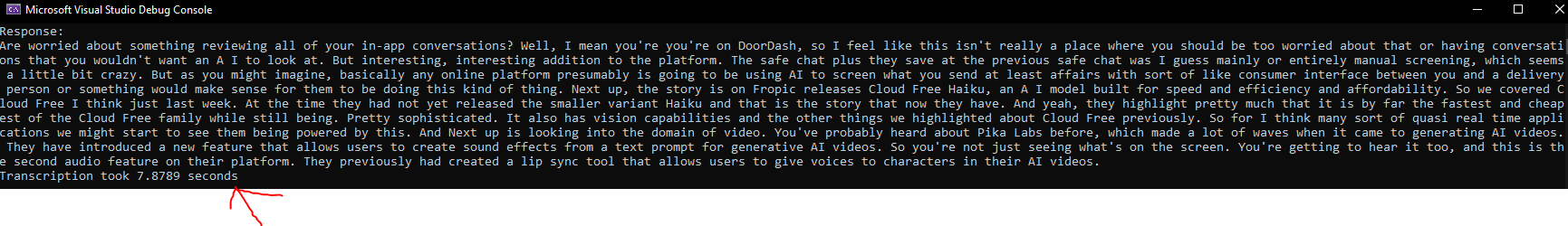

The output is returned in an amazing 7.8 seconds (an over 8x improvement), compared to 68 seconds last time in the context of a Docker container.

Fast Transcription can be previewed at ai.azure.com.

Conclusion

This result of 7.8 seconds for a 2-minute clip even beats the result from OpenAI's standalone open source Whisper tiny model as it was in April 2024, which transcribed the same audio clip in 13 seconds in a previous blog post.

It should also be noted that part of the reason inference took so long last time, under the conditions of an Azure AI Speech Service Docker container, was likely an artefact of the overheads brought on by the container image itself, which weighed in at 9 to 12GB in size and required 6GB of memory just to operate. However, even giving a whole 25s away to these overheads (leaving us with 43s of actual inference computation) would mean that the standard Azure AI Speech Service was taking at least 20 seconds.
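The comparisons in this post come down to simple ratios of the quoted timings. As a small sketch (the `Timing` helper is a hypothetical name, and the clip is assumed to be exactly 120 seconds long since the post only says "2 minute"), they can be worked out like this:

```csharp
using System;
using System.Globalization;

// Timings quoted in this post, in seconds.
const double clipSeconds = 120.0;        // assumption: the clip is exactly 2 minutes
const double fastSeconds = 7.8;          // Fast Transcription
const double containerSeconds = 68.0;    // standard service in a Docker container
const double whisperTinySeconds = 13.0;  // Whisper tiny, April 2024

var inv = CultureInfo.InvariantCulture;

Console.WriteLine(string.Format(inv, "Speedup vs container:    {0:0.0}x",
    Timing.Speedup(containerSeconds, fastSeconds)));
Console.WriteLine(string.Format(inv, "Speedup vs Whisper tiny: {0:0.0}x",
    Timing.Speedup(whisperTinySeconds, fastSeconds)));
Console.WriteLine(string.Format(inv, "Speed relative to real time: {0:0.0}x",
    Timing.SpeedVsRealTime(clipSeconds, fastSeconds)));

public static class Timing
{
    // How many times faster the new timing is than the baseline timing.
    public static double Speedup(double baselineSeconds, double newSeconds)
        => baselineSeconds / newSeconds;

    // Seconds of audio processed per second of wall-clock time.
    public static double SpeedVsRealTime(double audioSeconds, double processingSeconds)
        => audioSeconds / processingSeconds;
}
```

Under these numbers, Fast Transcription comes out roughly 8.7 times faster than the container run, about 1.7 times faster than the Whisper tiny run, and processes audio at around 15 times real time. These are single measurements, so the ratios are indicative rather than a benchmark.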